Locking without false sharing

In the previous episode, we peered into how locks are implemented in Java and saw an instance of LockSupport.park() being a bottleneck. However, that is not the only subtle bottleneck with locks. There is one pathology that ails all locks in java.util.concurrent.locks, spinlock and synchronized blocks regardless of queue capacity.

The problem is called false sharing, and to be fair, it is not a JDK bug. This is a subtle issue and like the LockSupport.park() situation from before, typical applications will have worse bottlenecks. Avoiding false sharing also has requires higher memory usage and will decrease data locality.

Today we are going to look into the false sharing problem, how to solve it, how to combine it with park and how to confirm it exists.

False sharing

Like the opening image on this post hints, false sharing consists of two or more threads each writing to an address which is not shared by any other thread. However, despite distinct, these addresses are so near to one another that they map to the same cache line. That is, the threads write to unique addresses, but like a power strip, are sharing a single cache line (or power socket).

To make things simple, pretend the Java compiler forgot how to optimize code and consider the following program:

public class Example {

private class C implements Runnable {

int value;

public void run() {

// pretend this won't be compiled as "value += 1_000_000_000";

for (int i = 0; i < 1_000_000_000; ++i)

++value;

}

}

public static void main(String[] args) throws Exception {

C c0 = new C(), c1 = new C();

Thread t0 = Thread.ofPlatform().start(c0);

Thread t1 = Thread.ofPlatform().start(c1);

t0.join();

t1.join();

}

}Just like the cloud is just someone else’s computer, memory is just a big array of bytes. Just after main instantiates c0 and c1, there will be some region of memory (0x40001000 in this example) where both objects will be allocated. This is not part of the Java Virtual Machine Spec, but OpenJDK (assuming a heap smaller than 32GiB) and its variants will allocate objects contiguously within the current TLAB (Thread Local Allocation Buffer) which is unlikely to be exhausted right after c0 gets instantiated:

+--------------------+-----------+-----------+-------------------+-----------+-----------+

| obj header for c0 | c0.value | [padding] | obj header for c1 | c1.value | [padding] |

| (16 bytes) | (4 bytes) | (4 bytes) | (16 bytes) | (4 bytes) | (4 bytes) |

+--------------------+-----------+-----------+-------------------+-----------+-----------+

0x40001000 0x40001010 0x40001014 0x40001018 0x40001028 0x4000102cContinuing with simplifications, assume t0 and t1 get scheduled to core 0 and core 1, respectively. In order to execute ++value, both cores will load their non-shared c0.value and c1.value into registers. Since main memory (RAM) is slower than CPUs, all reads (loads) and writes (stores) go through a cache hierarchy. The first, smallest and fastest level is L1, and the last, biggest and slowest level is LLC (Last-Level Cache). Modern x86/x86-64 CPUs have L1, L2 and L3 caches. L1 is always local to each core and L3 is always shared by all cores in a socket.

Assuming time stopped after core 0 loaded c0.value into the EAX register and before core 1 loaded c1.value but after core 1 had fetched data into its L1 cache, the state of both cores could be represented as follows:

Core 0 Core 1

+--------------------------------+ +--------------------------------+

| eax: 0 | | eax: |

| +---------------------------+ | | +---------------------------+ |

| | L1 cache | | | | L1 cache | |

| | 0x40001000-0x40001040 | | | | 0x40001000-0x40001040 | |

| | · | | | | · | |

| | · | | | | · | |

| | · | | | | · | |

| +---------------------------+ | | +---------------------------+ |

+--------------------------------+ +--------------------------------+Notice that despite reading distinct and unique addresses (c0.value at 0x40001010) and (c1.value at 0x400011028), both cores each have a copy of the same 64-byte region that starts at 0x40001000 in their L1 caches. This is not yet an instance of the false sharing problem.

The problem starts once core 0 has computed the increment and issues a store instruction to update the value at 0x40001010 to 1. This will be executed in the L1 cache local to core 0, since writing to actual RAM is too expensive. However, core 0 cannot simply update its own private view of 0x40001010 and keep silent. To make parallel and concurrent programming possible, all other cores must know that something changed.

Core 0 Core 1

+--------------------------------+ +--------------------------------+

| eax: 1 | | eax: |

| +---------------------------+ | | +---------------------------+ |

| | L1 cache | | | | L1 cache | |

| | ! 0x40001000-0x40001040 | | invalidate | | | |

| | · | | 0x40001000 | | · | |

| | · | +----------->| | · | |

| | · | | | | · | |

| +---------------------------+ | | +---------------------------+ |

+--------------------------------+ +--------------------------------+This is a simplification. Cache invalidation protocols are both an implementation detail and a trade secret.

Since address 0x40001028 is no longer present in core 1’s L1 cache, the core must fetch those 64 bytes from upper levels before continuing with the increment. Once core 1 writes to 0x40001028, it will then invalidate core 0’s L1-cached value for 0x40001010. Both cores will continue making each other stop and refresh its cache continuously. This is the false sharing problem.

Since this example uses plain reads and writes to C.value, both cores are allowed to make computations with stale data. Depending on when a core knows it used data invalidated by another core, it may decide to simply go on using potentially outdated data. This is fine because the memory model blames the programmer which did not use atomic operations or memory fences.

That is not the case for locks, which rely on atomic operations. If a core is executing LOCK.compareAndExchangeAcquire(this, 0, 1) and detects a cache invalidation, it must either check if the 4 bytes it read are still 0 or it must restart the whole atomic operation.

Keep in mind that, with a single lock, there is no false sharing. A lock is meant to be shared by threads to control access to a critical region. However, a lock can be subject to false sharing when objects instantiated close to the lock are used by a thread that is not using the lock. For example, the main thread may instantiate a lock to be shared between threads 1 and 2 and then instantiated an array to be used by thread 3. There will be false sharing between thread 3 and threads 1 and 2, but there is no false sharing between threads 1 and 2.

False sharing protection

Avoiding false sharing in C/C++ is easy: align object/struct allocations to 128 bytes. Intel and AMD use 64-byte cache lines and Intel strategy for prefetching into L2 cache involves prefetching two adjacent cache lines when either of them is accessed (cf. section 3.7.3, “hardware prefetching for second-level cache” of Intel Optimization Reference Manual).

Unfortunately (or fortunately depending on the viewpoint), there is no way for applications to control memory alignment in the JVM. But while allocations cannot be aligned to 128 bytes, adding 128 bytes before an object starts and 128 bytes after it ends will insulate the fields squeezed in between from false sharing.

One cannot simply write private byte bXXX 128 times and call it a day. There is no obligation for the JVM to represent object fields in the same order that they are declared in the Java source code. Some ordering can be enforced via inheritance. In order to make the implementations of sun.misc.Unsafe or jdk.internal.misc.Unsafe viable, JVM implementers are cornered into placing all fields declared by a superclass before all the fields declared by the subclass.

The reason is that

Unsafe.objectFieldOffset(Class, String)must return the same value whether the Class is the class that declares the field or a subclass. Such offsets are used to perform unsafe reads and writes given an Object reference and an offset into that object or array.

The inheritance trick applied to the C class of the example above yields this:

public static class C extends C3 { } // code continues using new C()

static class C3 extends C2 { // 128 byte fields placed after value

private byte b000, b001, b002, b003, b004, b005, b006, b007;

private byte b008, b009, b010, b011, b012, b013, b014, b015;

private byte b016, b017, b018, b019, b020, b021, b022, b023;

private byte b024, b025, b026, b027, b028, b029, b030, b031;

private byte b032, b033, b034, b035, b036, b037, b038, b049;

private byte b040, b041, b042, b043, b044, b045, b046, b047;

private byte b048, b049, b050, b051, b052, b053, b053, b055;

private byte b056, b057, b058, b059, b060, b061, b062, b063;

private byte b064, b065, b066, b067, b068, b069, b070, b071;

private byte b072, b073, b074, b075, b076, b077, b078, b079;

private byte b080, b081, b082, b083, b084, b085, b086, b087;

private byte b088, b089, b090, b091, b092, b093, b094, b095;

private byte b096, b097, b098, b099, b100, b101, b102, b103;

private byte b104, b105, b106, b107, b108, b109, b110, b111;

private byte b112, b113, b114, b115, b116, b117, b118, b119;

private byte b120, b121, b122, b123, b124, b125, b126, b127;

}

static class C2 {

int value; // protected against false sharing

}

static class C1 { // 128 byte fields will be placed before value

private byte b000, b001, b002, b003, b004, b005, b006, b007;

private byte b008, b009, b010, b011, b012, b013, b014, b015;

private byte b016, b017, b018, b019, b020, b021, b022, b023;

private byte b024, b025, b026, b027, b028, b029, b030, b031;

private byte b032, b033, b034, b035, b036, b037, b038, b049;

private byte b040, b041, b042, b043, b044, b045, b046, b047;

private byte b048, b049, b050, b051, b052, b053, b053, b055;

private byte b056, b057, b058, b059, b060, b061, b062, b063;

private byte b064, b065, b066, b067, b068, b069, b070, b071;

private byte b072, b073, b074, b075, b076, b077, b078, b079;

private byte b080, b081, b082, b083, b084, b085, b086, b087;

private byte b088, b089, b090, b091, b092, b093, b094, b095;

private byte b096, b097, b098, b099, b100, b101, b102, b103;

private byte b104, b105, b106, b107, b108, b109, b110, b111;

private byte b112, b113, b114, b115, b116, b117, b118, b119;

private byte b120, b121, b122, b123, b124, b125, b126, b127;

}Future JVM implementations might elide the unused fields. However, the fact that this technique it is employed by JMH and JCTools, might discourage such pull request.

Parking optimized for SPSC

ReentrantLocks are not immune to false sharing but yhey are immune to code changes by nosy programmers. Copying all its code out of the JDK is too much work for a blog post. But given the benchmark is evaluating a Single-Producer, Single-Consumer scenario, we can use that constraint to build a simple waiting implementation on top of the spinlock-based SpinQueue.

Such park()-enabled queue will be named SPSCQueue and will need all fields of the SpinQueue plus two Thread references to the potentially parked consumer and producer threads.

private static final VarHandle LOCK; // atomic access to plainLock

@SuppressWarnings("unused") private int plainLock; // same as in SpinQueue

private final int[] data; // same as in LockQueue and SpinQueue

private int readIdx, size; // same as in LockQueue and SpinQueue

private @Nullable Thread consumer, producer; // newThe put implementation must write to the producer field before it parks. This is how a future take or poll will know it needs to be unparked. Likewise, put must consume the consumer reference after it enqueues an item and before it releases the lock. Finally, after unlocking and before returning, put must unpark the parked consumer thread.

@Override public void put(int value) {

while (true) {

Thread unpark = null;

while ((int) LOCK.compareAndExchangeAcquire(this, 0, 1) != 0)

Thread.onSpinWait(); // behave as a spinlock

boolean locked = true;

try {

if (this.size >= data.length) { // no space, must wait

if (producer == null) { // will be true for SPSC

producer = currentThread(); // tell take() we are parked

LOCK.setRelease(this, 0);

locked = false;

LockSupport.park(); // releases the CPU

} // else: not single-producer, degrade to busy-wait

} else {

data[(readIdx+size)%data.length] = value;

++size;

unpark = consumer; // "consume" the consumer Thread reference

consumer = null; // every park() needs only one unpark()

break;

}

} finally {

if (locked) // only release the lock if this thread owns it

LOCK.setRelease(this, 0);

LockSupport.unpark(unpark); // does nothing if unpark == null

}

}

}The implementations for take, offer and poll are analogous. The complete implementation is in the SPSCQueue file

Detecting/measuring false sharing

If there is clear suspect and the complexity of adding the required padding is acceptable, benchmarking padded vs non-padded should be the preferred test. However, such comparison will only test whether padding improves performance. Even with false sharing present, the padding might not yield any benefit if there are other worse bottlenecks or if there is experimental variance above the effect caused by the false sharing.

We can continue using the same jmh repository of the previous episode. To enable comparison of implementations PaddedSpinQueue is a copy of SpinQueue with the 128-byte padding on each side and PaddedSPSCQueue is the equivalent for the SPSCQueue introduced above.

False sharing becomes an issue when threads frequently read and write to inadvertently shared cached lines. The most vulnerable scenario for false sharing is when both the producer and the consumer are always running, and they are contended by a single-slot queue. As discussed in the last post, each benchmark corresponds to a benchmarked thread paired to a non-benchmarked one that executes either a put or a take to counter the operation benchmarked. By setting the spinningCounterpart parameter to true, the counterpart thread will spin on a non-waiting operation (offer or poll) creating such maximum contention scenario:

./mvnw package && java -jar target/benchmarks.jar LockingWithoutLock.queue \

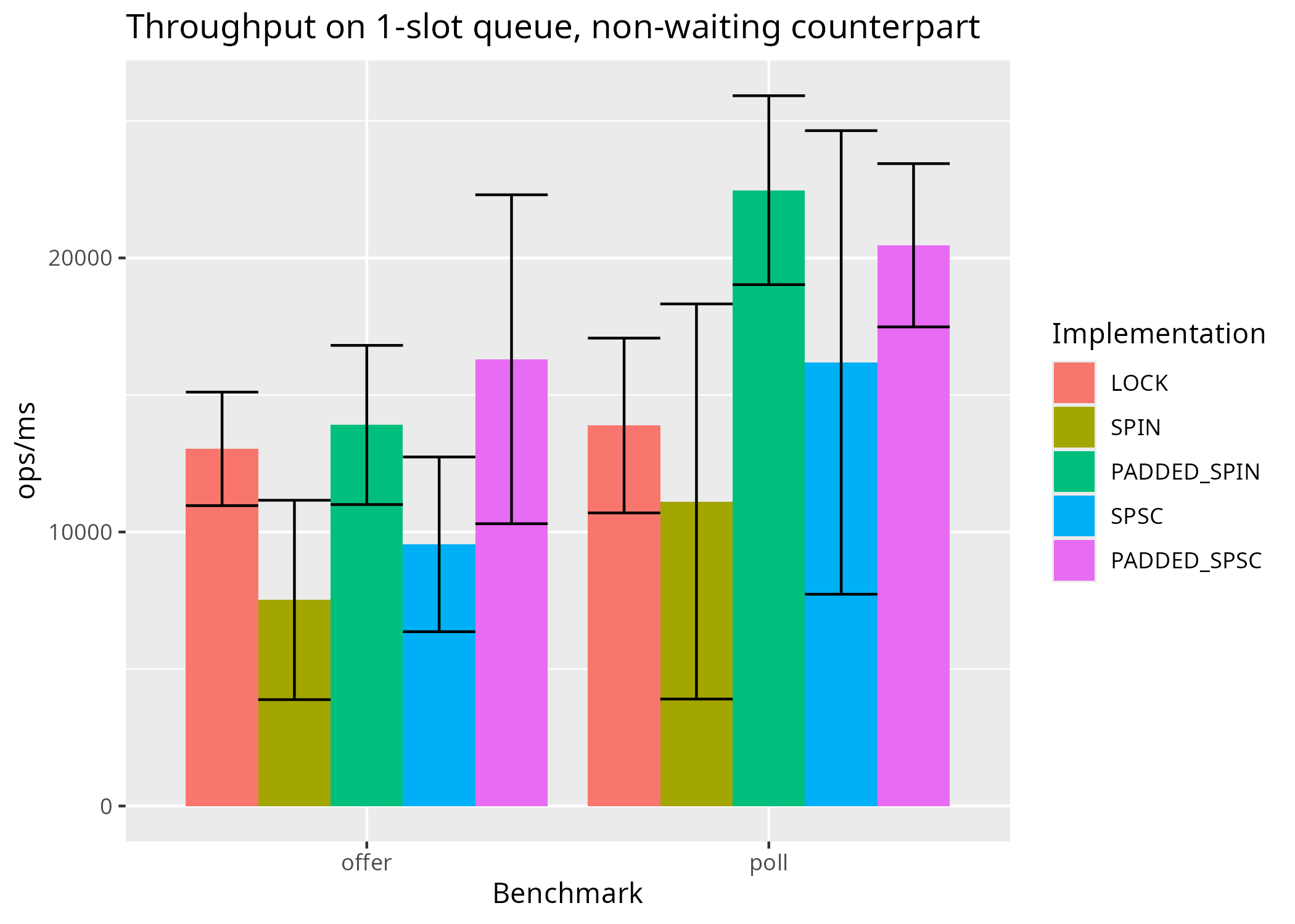

-p capacity=1 -p spinningCounterpart=false,true -rff CSV -rff jmh.csvWith some fondling on R, the results from my machine (log here) yield this plot benchmarking both sides of an offer/poll SPSC:

For SpinQueue, padding does improve average throughput of poll and although confidence intervals touch for offer, padding is justifiable, provided that the use such level of busy-waiting is itself justifiable. For SPSCQueue, there is a weak indication that padding improves throughput, but the results are not statistically significant. Another way to look at these results is that there are other factors at play that deserve more attention than the false sharing.

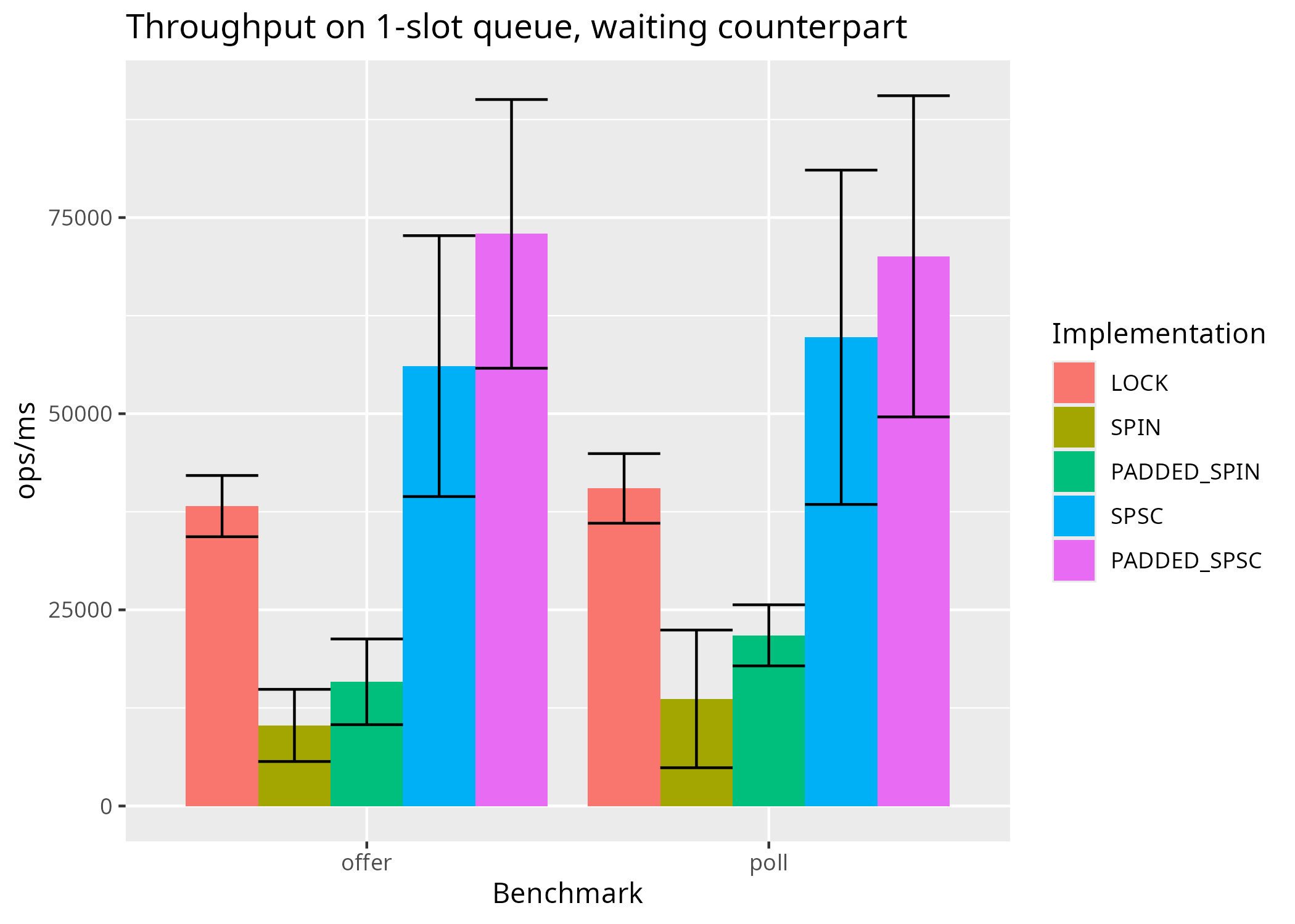

The following shows the results when the counterpart thread waits for free slots and items, using a condition variable (LockQueue), by spinning (SpinQueue) or by parking (SPSCQueue):

Yet again, avoiding false sharing by padding is overshadowed by variability and, if taken as a single factor, there is no proof of its benefit. However, when padding is combined with another factor (e.g., not parking during lock), the combination (PaddedSPSCQueue) does provide a statistically significant improvement to average throughput, when compared with the implementation that lacks both factors (LockQueue).

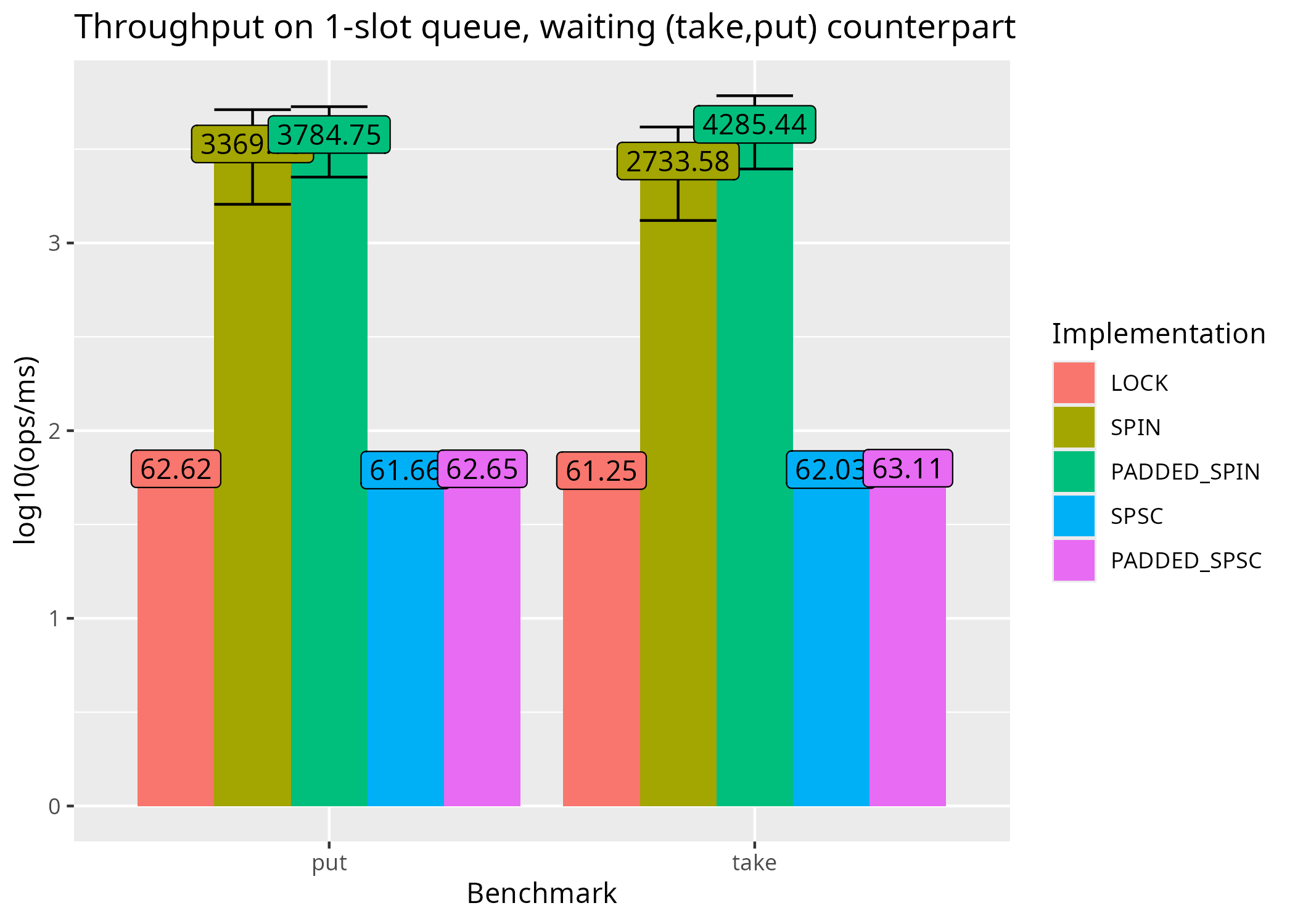

Looking at the benchmarks for take and put, padding once again does not improve the average throughput beyond experimental noise. As discussed in the previous post, the LockSupport.park() latency is more relevant than anything else.

These results evaluate the impact of padding on throughput. False sharing is a hypothesis which could be false if there is some other phenomena happening. A profiler could provide additional evidence that strengthens the hypothesis that there was false sharing and the padding reduced it.

Using perf c2c

There is no profiler for false sharing because profilers and CPUs cannot distinguish whether the sharing was intentional. Nevertheless, the c2c (cache-to-cache) subcommand of the Linux perf utility can gather some data on cache sharing in general. As other profilers JMH can run it on the forked JVMs where the benchmarked code runs. Let’s compare cache metrics for the only scenario where padding alone yielded a statistically significant improvement to performance:

sysctl kernel.perf_event_paranoid=1

sysctl kernel.kptr_restrict=0

java -jar target/benchmarks.jar LockingWithoutLock.poll \

-f 1 -p implementation=SPIN,PADDED_SPIN -p spinningCounterpart=true -p capacity=1 \

-p cooldownSleep=false -prof perfc2c-p cooldownSleep=false is required because JMH relies on computing a delay in milliseconds that

perfwill wait before starting collection. This computed delay will not match the reality ifLockingWithoutLock.setup()sleeps to prevent thermal throttling.

c2c uses CPU-specific features from AMD, Intel and Arm to collect hits (when a load/store was fulfilled at the cache) and misses (when the load/store had to first fetch data into the cache from an upper level). Both L1 cache and to LLC (Last Level Cache) are inspected. A special type of “hit” is also collected by c2c: HITM (Hit Modified), denoting a load or store instruction that would otherwise be a hit, but since the cache line was concurrently modified by another core, there was synchronization between the cores to merge updates or to re-fetch the data.

False sharing increases the number of HITM events. However, an elevated number of HITM events can also be caused by locks, any other form of shared-memory communication, such as queues. In the above benchmark command, for implementation=SPIN, there will be both false and intentional sharing.

The statistics most relevant to this benchmark given by perf c2c are:

| c2c statistic | SPIN | PADDED_SPIN |

|---|---|---|

| Locked Load/Store Operations | 319 | 360 |

| Load Operations | 1493 | 1614 |

| LLC Misses to Local DRAM | 1.8% | 1.1% |

| LLC Misses to Remote cache (HITM) | 98.2% | 98.9% |

| Total Shared Cache lines | 29 | 31 |

| L1D hits on shared lines | 496 | 310 |

| LLC hits on shared lines | 366 | 264 |

| Locked Access on shared lines | 215 | 212 |

These numbers show that both implementations are suffering with writes to locations that sum 1~2KiB.

The numbers for the PADDED_SPIN implementation do show a reduction on the hits to shared cached lines both at L1 cache and LLC (L3). Furthermore, this reduction is bigger than the reduction observed for Locked Access on shared lines. In other words, true sharing (the locked access stemming from the LOCK.compareAndExchange*() calls) remains at a similar volume, but other instances sharing have reduced.

In combination with the throughput results, we now have enough evidence to confirm that there was false sharing and that the padding (the only change between implementations) reduced it.

Since we got to this point, let’s inspect a combination of padding with another factor in LockQueue vs. PaddedSPSCQueue:

java -jar target/benchmarks.jar LockingWithoutLock.queue -f 1 \

-p implementation=LOCK,SPSC,PADDED_SPSC -p capacity=1 \

-p cooldownSleep=false -prof perfc2c| c2c statistic | LOCK | SPSC | PADDED_SPSC |

|---|---|---|---|

| Locked Load/Store Operations | 300 | 483 | 353 |

| Load Operations | 5176 | 5200 | 4531 |

| LLC Misses to Local DRAM | 1.6% | 0.6% | 0.8% |

| LLC Misses to Remote cache (HITM) | 98.4% | 99.4% | 99.2% |

| Total Shared Cache lines | 78 | 61 | 61 |

| L1D hits on shared lines | 290 | 443 | 294 |

| LLC hits on shared lines | 123 | 166 | 133 |

| Locked Access on shared lines | 67 | 114 | 128 |

In this case, numbers show that SPSCQueue has more false sharing than LockQueue. This happens because SPSCQueue fuses into one object what LockQueue uses three objects: the LockQueue itself, the ReentrantLock and the ReentrantLock.NonfairSync. The more compact memory layout increases the probability of false sharing when all queues are sequentially instantiated. In this case padding fixes the fragility that SPSC introduced.