Locking without a Lock

Race conditions have cast insurmountable pain and suffering over mankind since untold times. Today, locks allow programmers to expunge race conditions. However, almost no programmer will happily type new ReentrantLock() and that is not a Java joke.

Locks burden developers with complexity, cause latency, mess-up Instruction-Level Parallelism, and worst of all, cause waiting. There are conceptual and technical tools to avoid and resolve complexity. Things get more challenging for the other consequences of locking: one does not simply print a CPU and waiting is the whole point of a lock.

Trying to write a better ReentrantLock is a fool’s errand. But every implementation has corner cases and every problem can have some corners cut.

This post is the first of a series. After a refresher on the producer/consumer problem and solution, we will look into spinlocks, how good or bad they can be and when they can leave the code purgatory.

Single-Producer, Single-Consumer

This is a subclass of the producer/consumer problem. In the general consumer/producer problem with n producers and m consumers:

+------------+ +---+ take from +------------+

| Producer 1 +------------+ | 1 |<------o--------+ Consumer 1 |

+------------+ | put at +---+ | +------------+

· o------------>| 2 | | ·

· | +---+ | ·

· | | _ | | ·

+------------+ | +---+ | +------------+

| Producer n +------------+ | _ | +--------+ Consumer m |

+------------+ +---+ +------------+

the queueHowever, with a single producer and a single consumer, two coordination points (marked as hollow circles) disappear:

+------------+ +---+ take from +------------+

| Producer 1 +------------+ | 1 |<---------------+ Consumer 1 |

+------------+ | put at +---+ (exclusive +------------+

+------------>| 2 | access to take index)

(exclusive access +---+

to put index) | _ | the queue

+---+ Since there is no producer-producer nor consumer-consumer competition, knowing a problem is SPSC, allows some corner-cutting. If values queued are objects, and null would not be produced, the whole need for a lock can be cut, as JCTools does.

Show me the code

The general version of the producer/consumer problem can be implemented using ReentrantLock as follows:

private final ReentrantLock lock = new ReentrantLock();

private final Condition hasSpace = lock.newCondition();

private final Condition hasItems = lock.newCondition();

private final int[] data;

private int readIdx, size;

@Override public void put(int value) {

lock.lock();

try {

while (size == data.length && !closed)

hasSpace.awaitUninterruptibly();

data[(readIdx+size)%data.length] = value;

++size;

hasItems.signal();

} finally { lock.unlock(); }

}

@Override public int take() {

lock.lock();

try {

while (size == 0 && !closed)

hasItems.awaitUninterruptibly();

int readIdx = this.readIdx, item = data[readIdx];

this.readIdx = (readIdx+1)%data.length;

--size;

hasSpace.signal();

return item;

} finally { lock.unlock(); }

}If restricting the queue capacity to a value n = 2ⁱ, is acceptable,

(readIdx+1)&mask, withmask = capacity-1being a final field, would be faster than(readIdx+1)%data.length.

All the code for this post series is in the argos-blog-jmh GitHub repository. The above code is from LockQueue.java For build and run instructions, see the benchmark section.

Spinlocks

A spin lock is a lock implementation that spins in a tight loop trying to acquire the lock. In general, this is bad because the waiting thread also does not allow another thread to use that CPU core until the OS scheduler preempts the spinning thread, even if the other thread is the one that currently holds the lock.

A flawed spin lock looks like this:

private boolean locked;

public void lock() {

while (!locked)

Thread.onSpinWait(); // nop x86 instruction

locked = true; // WRONG: multiple threads think they have the lock

}There are two problems in the above code. From the point the while reads false in locked until the moment true is written, another thread running the same lock() call may have already observed the same false in locked. Then, both threads will believe they have exclusive access to the critical region protected.

The second issue is that if lock() gets inlined into code that did a previous read or write into locked, the compiler may do the equivalent of surrounding the while with a if (registerX). This causes the same kind of race, but with a larger window of opportunity.

The correct implementation would be:

private final AtomicBoolean locked = new AtomicBoolean();

public void lock() {

while (locked.compareAndExchangeAcquire(false, true))

Thread.onSpinWait();

}compareAndExchangeAcquire provides two guarantees: 1. the call will behave in mutual exclusion with any other method in this AtomicBoolean that changes the value. This is implemented in hardware by the CPU and works even on NUMA systems and on processors whose instruction set has weaker memory ordering such as arm and powerPC. 1. no read operation that appears after this call in the source code will be moved by the compiler or processor to before the compareAndExchangeAcquire call. This is the acquire ordering semantics - Keep in mind that due to JIT, code gets compiled multiple times during execution, leading to changing behavior since each time the compiler can decide to order operations differently according to profiling data and inlining context - Processors also execute machine code out-of-order

The unlock implementation is a simple locked.setRelase(false). In addition to hardware-backed mutual exclusion (aka atomicity), the Release ordering semantics ensures that any writes made by this thread before this call will be visible to any thread that performs an Acquire operation on the same AtomicBoolean instance.

Indirection & VarHandle

Although the impact is insignificant when compared to unbounded spinning, the above implementation involves indirection that could be avoided. Locking consists of: 1. reading the reference locked from address(queue)+fieldOffset("locked"), 2. executing the while (compareAndExchangeAcquire) on address(locked)+fieldOffset("value")

The JVM cannot embed the AtomicBoolean into the Queue object as would be done in C++ because the AtomicBoolean has its own identity as an object.

Fortunately, all operations of Atomic* objects are available through VarHandles. A VarHandle allows atomic operations directly on object fields and is how AtomicBoolean is implemented. As a personal convention, I prefer prefixing plain to names of fields accessed via VarHandles. This makes it explicit that reading the field can be prone to reading old values and writes to the field might not be visible to other threads in the same order as they appear in the Java source code.

import java.lang.invoke.VarHandle;

private static final VarHandle LOCKED;

private boolean plainLocked;

public void lock() {

while ((boolean)LOCKED.compareAndExchangeAcquire(this, false, true))

Thread.onSpinWait();

}Since LOCKED accesses a field, it must receive the container object as first argument in all its methods. All other parameters are the same as in the methods of the equivalent Atomic* class. Another inconvenience is that VarHandle methods are signature polymorphic. Which means the compiler is blind to the types, requiring explicit casts. On the flip side, once the invocation of such methods gets HIT-compiled with optimizations, the JIT compiler can safely elide type checks. Furthermore, nearly all these methods are compiler intrinsics, which will make the JIT (and GraalVM NativeImage) compiler spew native code implementing the methods instead of actually calling them.

However, the weirdest aspect of VarHandles is their initialization:

private static final VarHandle LOCK;

static {

try {

LOCK = MethodHandles.lookup().findVarHandle(SpinQueue.class, "plainLocked", int.class);

} catch (NoSuchFieldException | IllegalAccessException e) {

throw new ExceptionInInitializerError(e);

}

}A spinlocked queue

The spinlocked queue has almost the same fields as the ReentrantLock-backed queue, except there are no Conditions and ReentrantLock lock becomes int plainLock.

private static final VarHandle LOCK; // wraps int plainLock

@SuppressWarnings("unused") private int plainLock;

private final int[] data;

private int readIdx, size;With enough coffee, you can notice that an

plainLockis an int and not aboolean. A boolean would be correct. However, hardware does not implement atomic operations and memory barriers for bytes or bits. Thus, either the compiler or the processor front-end will have to translate byte-sized operations into 4 bytes operations including the bytes neighboring the actual boolean. Using an int makes the memory cost more explicit and sincecompareAndExchangeereturns the observed value instead of whether the operation succeeded, in my opinion, an int yields clearer code.

The put operation is implemented as:

@Override public void put(int value) {

while (true) { // (1) spin for free space

while ((int)LOCK.compareAndExchangeAcquire(this, 0, 1) != 0) // (2) spinlock

Thread.onSpinWait(); // inner spin on lock

try {

if (size < data.length) {

data[(readIdx+size)%data.length] = value;

++size;

break;

}

} finally {

LOCK.setRelease(this, 0); // unlock()

}

}

}The implementation for take is analogous. The full code is in the SpinQueue file.

Without a Condition for “free queue space”, puthas to spin waiting for two things: until it acquires the lock (2) and until there is space in the queue (1). Keep in mind that the absence of a lock() call does not mean absence of deadlocks. An implementation that spins for free space while owning the spinlock will deadlock.

To understand whether this has any use, we need a benchmark.

Benchmarking

The benchmark is built using JMH - the Java Microbenchmark Harness. In addition to providing some comfort features, such as dependency injection, and a design that tries to prevent bad benchmark design, JMH also uses internal APIs to reduce interference from non-deterministic behaviours from the JVM. Another important feature of JMH is stopping the compiler from detecting that your code is useless and should be erased.

The object of benchmarking will be the basic put and take operations on a queue with a single producer and a single consumer. For each of these methods, both a polling and a blocking variant shall be evaluated:

put: Appends a value to the end of the queue. Will block the calling thread if the queue is full, until a consumer frees queue capacity.take: Gets and remove the oldest value in the queue. If the queue is empty will block the calling thread until a producer appends a value.offer: Tries to append a value to the end of the queue. If the queue is full, do nothing and returnfalseIf there was space and the value got appended, returntrue.poll: Gets and remove the oldest value in the queue if the queue is not empty, else returns a special value.

This is the benchmarked code:

@Group("queue") @Benchmark public void put(ProducerState s) {

s.queue.put(s.counter++);

}

@Group("queue") @Benchmark public int take(ConsumerState s) {

return s.queue.take();

}

@Group("queue") @Benchmark public int poll(ConsumerState s) {

return s.queue.poll(0);

}

@Group("queue") @Benchmark public boolean offer(ProducerState s) {

return s.queue.offer(s.counter++);

}JMH will furiously invoke these methods over a fixed time span from at least 4 threads, with each thread always calling the same method with a ConsumerState or ProducerState that is exclusive to that thread. Each of these time spans yields an iteration.

A put benchmark thread cannot share a queue with a take benchmark thread because JMH does not coordinate the decision of when an iteration ends (coordinating that could interfere in results), leaving one thread starved. Therefore, the @Setup and @TearDown-annotated methods in the *State objects manage the lifecycle of a counterpart thread for every benchmark thread. The counterpart thread executes a blocking operation: put for take and poll benchmarks and take for put and offer benchmarks. The use of such counterpart threads, requires that JMH be instructed to use only half of available CPU cores using the -t command-line option.

Each method is measured in 10 iterations with a runtime of 100ms per iteration. Before these measurements, the same process happens for 10 iterations of 200ms with the goal of warmup. Warmup allows the JIT compiler to profile and optimize the code being benchmarked. Since this will be likely run on notebooks, LockingWithoutLock.setup() will sleep in between iterations to avoid thermal throttling for benchmark scenarios that are scheduled to run later.

From the command-line:

git clone https://github.com/alexishuf/argos-blog-jmh.git

cd argos-blog-jmh

./mvnw package -DskipTests=true

java -jar target/benchmarks.jar LockingWithoutLock.queue -t 4 -p implementation=LOCK,SPIN -p capacity=1,4096Use the

-hto get JMH options help

Is this any good?

The choice of capacity values in the command line is not random. capacity=1 leads to higher contention with producers frequently waiting for their consumers and vice versa. On the other extreme, capacity=4096 tries to allow for producer and consumers to work more before waiting for one another.

Starting from larger queues and looking at overall operations per second, SpinQueue and LockQueue are tied with 58400.401 and 64264.179 operations per millisecond. This is only a tie in the sense that repeating the experiment on the same machine at same starting temperature with same background processes will yield average throughput values for which the confidence intervals ( obtained by adding and subtracting the error) will overlap. Among individual operations, results point to LockQueue delivering higher and more predictable, throughput when methods are compared individually, except for a tie on take, which could be circumstantial.

Combined with complexity, variance and the potential for higher energy usage, SpinQueue should remain in the purgatory.

On the other end, something interesting happens with take and put in LockQueue. The small queue makes increases the number of times take has to wait for an item and the number of times put has to wait for the signle slot in the queue to be free. In this scenario, LockQueue throughput declines much more than it does for SpinQueue.

Benchmark (capacity) (cooldownSleep) (implementation) Mode Cnt Score Error Units

LockingWithoutLock.queue:put 1 true LOCK thrpt 30 69.050 ± 2.277 ops/ms

LockingWithoutLock.queue:take 1 true LOCK thrpt 30 68.102 ± 3.317 ops/ms

LockingWithoutLock.queue:put 1 true SPIN thrpt 30 2396.060 ± 1286.064 ops/ms

LockingWithoutLock.queue:take 1 true SPIN thrpt 30 3284.775 ± 2147.780 ops/msReentrantLock.lock() will always start by behaving as a spinlock and will attempt twice to acquire the lock upon entry, first by calling initialTryLock() then calling tryAcquire() at AbstractQueuedSynchronizer.acquire(1). After that, it may try again again before entering a linked queue of waiting threads and once it has enqueued the current thread, it will release the CPU using LockSupport.park(). In the case of native threads (not virtual), this will involve a syscall to make the OS scheduler unmark the thread as runnable, allowing other threads to use that CPU core. The thread will wake from park() once unlock() sees it queued and calls LockSupport.unpark() for the waiting thread. In the case of Condition.awaitUninterruptibly() the code path leading to park() is shorter: lock state is preserved locally for the calling thread, the lock is completely released and the thread park()s.

When can it leave the purgatory?

What these results hint is that the latency of ReentrantLock.lock() and Condition.awaitUninterruptibly() amounts to most of the latency in take() and park(). Given these methods were not an issue on larger-capacity queues, LockSupport.park(), which implements actual waiting becomes the prime suspect.

In general, LockSupport.park() will have a large latency because it waits for something. In general, replacing park with spinning will only preserve most of the latency for that thread, but will also increase latency to all other threads because the CPU is busy doing useless work.

The latency of take()is determined not only by its code but by these actions and events of the counterpart thread that will put(item):

- being assigned a CPU slice,

- creating an item to be put

- acquiring the lock,

- adding the item into the queue (writing the reference, updating counters),

- releasing the lock and calling

LockSupport.unpark()

The benchmark results show that all code that is common to LockQueue.take() and SpinQueue.take() as well as the above 5 events/actions pertaining the counterpart thread happen on average 3284.775 times per millisecond, yielding an average latency of 0.304us. Since LockQueue.take() waits for the same events/actions of the counterpart thread and performs the same bookkeeping actions as SpinQueue.take(), the benchmark shows that LockQueue.take() spends 14.471us - 0.304us = 14.167us or 96.4% of its time on ReentrantLock.lock()/unlock(), which wrap the park()/unpark() calls.

When SPSC appears in real applications, all bookkeeping in take/put tends to be negligible, latency-wise. The first 2 events pertaining the counterpart being scheduled and producing/consuming an item, are only fast on few applications. Notice that throughput in this benchmark is measured by milliseconds and if the producer were producing requests to a server, it would take 69,104 requests per second per thread to have one request ready for take() every 14.471us. Also note that in such scenario no sane person would be using a 1-slot queue.

Stock trading is famous for high-throughput with low latency requirements. Such high throughput can naturally occur in simulation, scientific and optimization software where work items are spontaneously generated. Some batch processing systems can also see throughput beyond what I/O hardware capabilities allow in between stages of a processing pipeline.

Implementing a spinlock just to test whether it will improve throughput is not the smartest use of time. Neither is sprinkling tryLock() calls throughout a large code base. Fortunately, the presence of frequent and short thread park events correlates with the counterpart producer/consumer being able to sustain a throughput high enough to make LockSupport.park() a bottleneck.

Java Flight Recorder has a ThreadPark event that is exactly what is needed. However, this event is collected by sampling and the flight recorder will miss the shortest parks that could be replaced with spinning. However, looking at a recording and seeing park events above 1ms is a quick way to discard spinning as possibility.

The alternate approach is to use async-profiler , which despite being a sampling CPU profiler, does employ instrumentation to profile locks. It can be run on real applications, but since this is a benchmark, JMH can run it on the forked JVMs:

java -jar target/benchmarks.jar LockingWithoutLock.queue \

-t 4 -p implementation=LOCK -p capacity=1 \

-prof async:libPath=/path/to/async-profiler/lib/libasyncProfiler.so;output=jfr;event=lockThis will generate jfr-lock.jfr file inside a directory whose name is the fully qualified class name of the benchmark followed by all benchmark parameters. In this case:

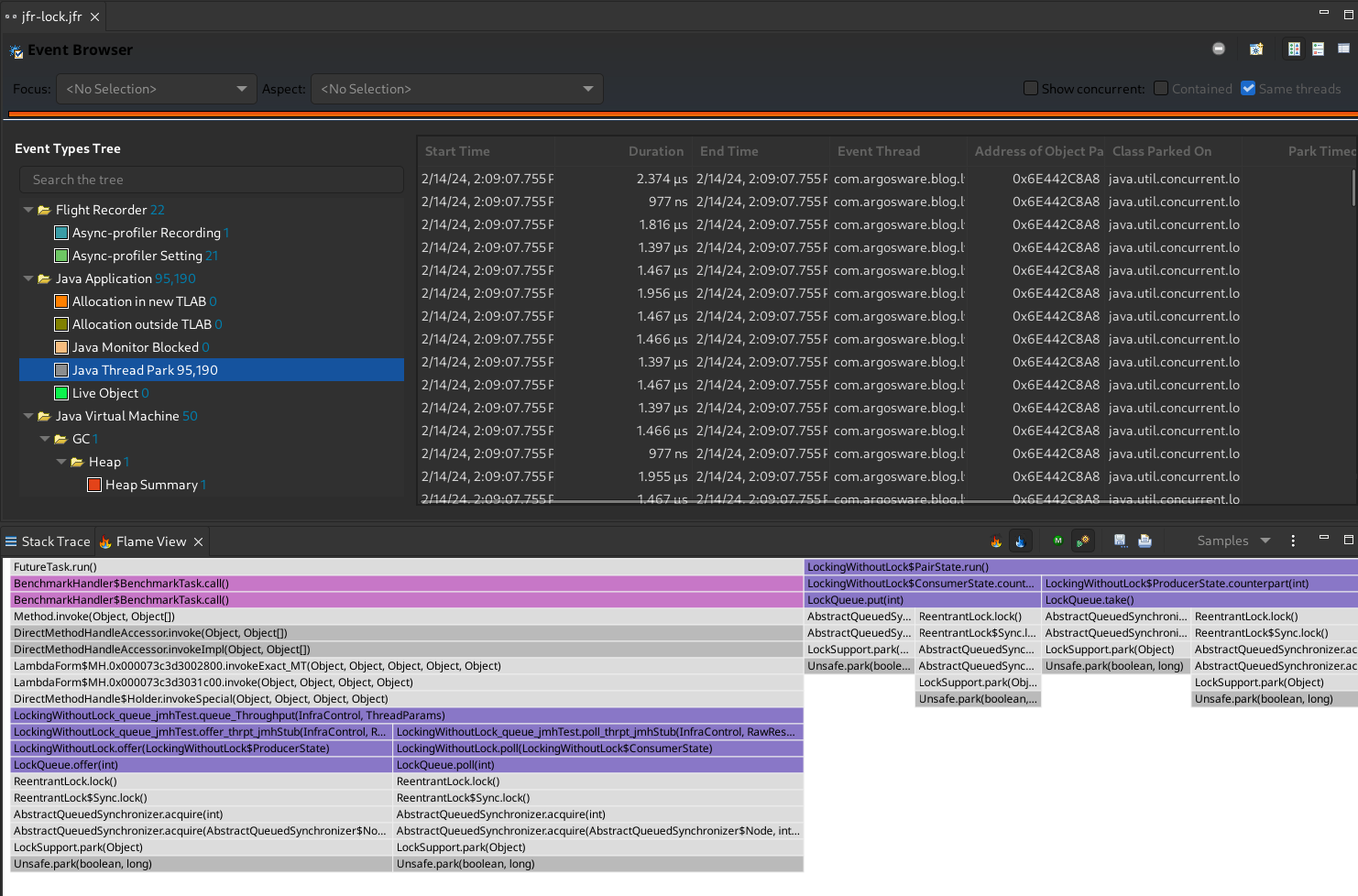

com.argosware.blog.lwl.LockingWithoutLock.queue-Throughput-capacity-1-cooldownSleep-true-implementation-LOCK/jfr-lock.jfrAfter loading the file into Java Mission Control, Event Browser -> Java Application -> Java Thread Park will show a barrage of threads parking for less than 2 microseconds. By not selecting any particular event, the flame (or icicle) graph bellow will aggregate all park events. The widest “flame” is where most time is being wasted.

Do not use this in production (yet). In the next episode, it will get faster